Artificial intelligence is no longer a futuristic concept — it’s a driving force behind today’s most innovative applications. But as more businesses build AI-powered solutions, one thing becomes clear: traditional software architectures aren’t enough.

The demands of AI development, from real-time processing to large model inference and continuous learning, require a new kind of infrastructure. In this post, we’ll explore why the old ways fall short, what modern AI architecture looks like, and how it can power the next generation of intelligent applications.

Conventional software systems were built for static workflows. You design a feature, deploy it, and maintain it. AI development, on the other hand, is dynamic and data-driven — it requires continuous iteration and model tuning.

Some key limitations of legacy or monolithic architectures in AI contexts:

Difficulty scaling with large datasets and model inference loads

Inflexible pipelines that slow down deployment and retraining

Poor separation between training, inference, and user interfaces

Lack of support for real-time updates and feedback loops

For example, many teams attempting to integrate large language models (LLMs) into legacy web apps hit major bottlenecks in model serving, latency, or model versioning. The architecture simply wasn’t designed to support these workflows.

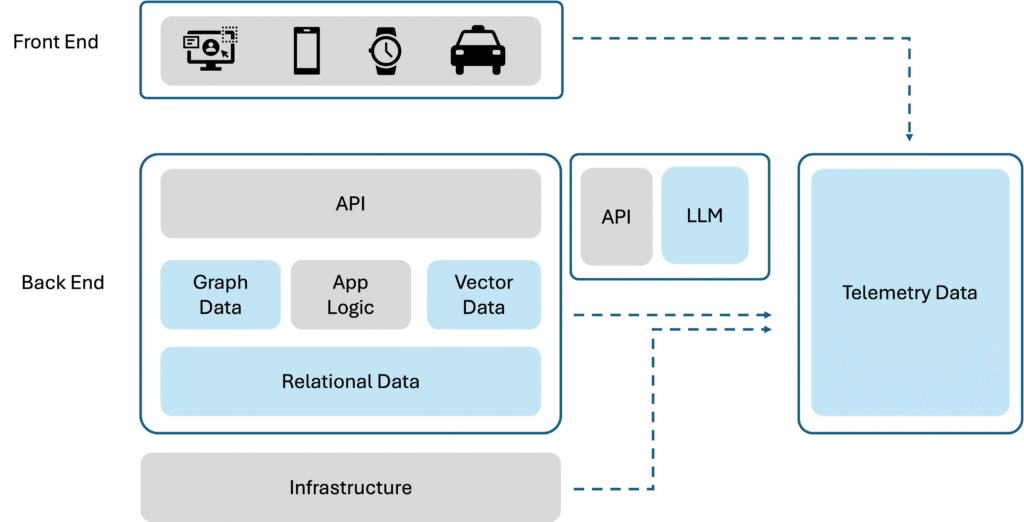

Modern AI architecture solves these challenges by separating concerns and optimizing for modularity, flexibility, and performance.

The new standard includes:

Modular Services: Different components for training, inference, data ingestion, and monitoring

Cloud-Native Infrastructure: Auto-scaling compute, containerized environments, and managed services like AWS SageMaker or Vertex AI

Event-Driven Pipelines: Use of Kafka, Airflow, or similar tools to orchestrate data flow

Model-Centric Layers: Separate environments for experimenting, testing, and deploying machine learning models

Companies like OpenAI and Hugging Face have popularized this pattern, where models, data, and product features evolve independently — allowing teams to innovate faster and manage complexity at scale.
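To make the modular, event-driven idea concrete, here is a minimal sketch in plain Python. It assumes an in-memory `EventBus` class as a stand-in for a real broker such as Kafka, and the topic names, the three service functions, and the averaging "model" are all hypothetical placeholders. The point is only that ingestion, inference, and monitoring never call each other directly.

```python
import queue
from dataclasses import dataclass


@dataclass
class Event:
    topic: str
    payload: dict


class EventBus:
    """In-memory stand-in for a message broker (illustrative assumption)."""

    def __init__(self):
        self._queue = queue.Queue()
        self._handlers = {}

    def subscribe(self, topic, handler):
        self._handlers.setdefault(topic, []).append(handler)

    def publish(self, topic, payload):
        self._queue.put(Event(topic, payload))

    def run_until_empty(self):
        # Deliver events in publish order; handlers may publish follow-ups.
        while not self._queue.empty():
            event = self._queue.get()
            for handler in self._handlers.get(event.topic, []):
                handler(event.payload)


bus = EventBus()
predictions = []
metrics = {"served": 0}


def ingest(raw):
    # Data ingestion service: clean the record, then hand it off.
    features = [float(x) for x in raw["values"]]
    bus.publish("features.ready", {"id": raw["id"], "features": features})


def infer(msg):
    # Inference service: a placeholder "model" that averages the features.
    score = sum(msg["features"]) / len(msg["features"])
    bus.publish("prediction.made", {"id": msg["id"], "score": score})


def monitor(msg):
    # Monitoring service: records every prediction it sees.
    metrics["served"] += 1
    predictions.append(msg)


bus.subscribe("raw.received", ingest)
bus.subscribe("features.ready", infer)
bus.subscribe("prediction.made", monitor)

bus.publish("raw.received", {"id": 1, "values": ["1", "2", "3"]})
bus.run_until_empty()
```

Because each service only knows about topics, you could swap the inference function for a new model without touching ingestion or monitoring.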

To build robust, production-grade AI systems, the following components are essential:

Inference Engine – optimized for low-latency model serving (e.g., NVIDIA Triton, BentoML)

Model Lifecycle Management – track, version, and deploy ML models with tools like MLflow or Weights & Biases

Observability Layer – monitor drift, latency, and performance using tools like Arize or Aporia

Data Pipeline – clean, transform, and stream data into the right format for models

APIs & Integration Layer – connect models to your front end or external tools

These pieces work together to deliver real-time, scalable, and intelligent behavior — without compromising on performance or reliability.
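As a rough illustration of how lifecycle management and serving fit together, the sketch below uses a toy in-memory `ModelRegistry` and a `serve` function. Both names are hypothetical, and this is not how MLflow or any specific tool is implemented. It only shows the idea of registering versions, promoting one to production, and recording latency for each request.

```python
import time


class ModelRegistry:
    """Toy in-memory registry; real tools persist this state."""

    def __init__(self):
        self._versions = {}
        self._production = None

    def register(self, model):
        # Versions are assigned sequentially, starting at 1.
        version = len(self._versions) + 1
        self._versions[version] = model
        return version

    def promote(self, version):
        if version not in self._versions:
            raise KeyError(f"unknown model version {version}")
        self._production = version

    def production(self):
        if self._production is None:
            raise RuntimeError("no model has been promoted yet")
        return self._production, self._versions[self._production]


def serve(registry, features, latency_log):
    # Look up the production model, time the call, and tag the response
    # with the version that produced it.
    version, model = registry.production()
    start = time.perf_counter()
    prediction = model(features)
    latency_log.append(time.perf_counter() - start)
    return {"model_version": version, "prediction": prediction}


registry = ModelRegistry()
v1 = registry.register(lambda xs: sum(xs))            # placeholder model
v2 = registry.register(lambda xs: sum(xs) / len(xs))  # placeholder model

latencies = []
registry.promote(v1)
first = serve(registry, [2.0, 4.0], latencies)
registry.promote(v2)
second = serve(registry, [2.0, 4.0], latencies)
```

Tagging every response with its model version is a small design choice that pays off later, since it lets the observability layer attribute latency or quality changes to a specific release.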

Adopting a modern architecture isn’t just a technical decision — it’s a strategic move. Here’s what it unlocks:

Speed: Deploy updates and models faster with automated CI/CD for ML

Scale: Handle millions of predictions with horizontal scaling

Cost-Efficiency: Use the right resources for the right task — reduce waste

Collaboration: Dev, data, and product teams can iterate independently

Reliability: Detect and recover from model failures without human intervention

In short, better architecture leads to better products.
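One way to picture the reliability point is a predictor that falls back to a known-good model when the primary one keeps failing. The sketch below is a deliberately simplified circuit breaker: the `FallbackPredictor` class, its `max_failures` parameter, and the two stand-in models are assumptions for illustration, and it has no cooldown or automatic retry of the primary, which a production system would likely need.

```python
class FallbackPredictor:
    """Route to a fallback model after repeated primary failures."""

    def __init__(self, primary, fallback, max_failures=3):
        self.primary = primary
        self.fallback = fallback
        self.max_failures = max_failures
        self.failures = 0  # consecutive primary failures so far

    def predict(self, features):
        # Only try the primary while it is below the failure limit.
        if self.failures < self.max_failures:
            try:
                result = self.primary(features)
                self.failures = 0  # a success resets the counter
                return result
            except Exception:
                self.failures += 1
        # Either the primary just failed or it is currently disabled.
        return self.fallback(features)


def broken_model(features):
    # Stand-in for a model server that is down.
    raise RuntimeError("model server unavailable")


def stable_model(features):
    # Stand-in for a previous, known-good model version.
    return 0.5


predictor = FallbackPredictor(broken_model, stable_model, max_failures=2)
results = [predictor.predict([1.0]) for _ in range(3)]
```

Callers keep getting answers from the stable model while the primary is unhealthy, and the failure count gives the monitoring side something to alert on.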

A mid-sized SaaS company building a recommendation engine was struggling with latency issues, frequent downtime, and delayed model updates.

After migrating to a microservices-based AI architecture using FastAPI, MLflow, and Kubernetes, they reduced model deployment time from weeks to days and improved system uptime by 35%. Their team now experiments and releases new models without breaking the core app — and customers are seeing better, faster recommendations.

Ask yourself:

Are models taking too long to deploy or retrain?

Is your AI workflow breaking down at scale?

Are developers and data scientists constantly stepping on each other’s toes?

Do you lack visibility into model performance in production?

If the answer to any of these is yes, it’s time to rethink your AI app architecture.

As AI continues to transform industries, building intelligent applications will demand more than just smart algorithms. It will require smart architecture — systems that are adaptable, modular, observable, and built for the realities of AI at scale.

Whether you’re launching a new AI product or scaling an existing one, the foundation you build on will define your speed, agility, and success.

At ReNewator, we specialize in building AI-powered platforms from the ground up — with infrastructure that scales and evolves with your business. From custom LLM integration to real-time machine learning apps, our team helps you move from idea to impact — faster.

Let’s talk about building smarter.

Q1: What is AI application architecture?

AI application architecture is the technical design and structure of the systems that support the development, deployment, and scaling of AI models and applications.

Q2: Why is architecture important for AI apps?

AI systems depend on constant data flow, frequent model updates, and heavy compute loads. Without the right structure, they can’t scale or perform reliably.

Q3: Can I use traditional backend frameworks for AI?

Only to a limited extent. AI applications demand specialized infrastructure for inference, model management, and data streaming.

Q4: What tools help with AI observability?

Some leading tools include Arize, Aporia, and WhyLabs.
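To give a feel for what drift monitoring involves, here is a small sketch of the population stability index (PSI), one common way to compare a feature's training distribution with what the model sees in production. It is not taken from any of the tools above. The `psi` function, its bin count, and the sample data are illustrative assumptions, and the frequently quoted rule of thumb that values above roughly 0.25 signal a significant shift should be treated as a starting point rather than a fixed threshold.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population stability index between two samples of one feature."""
    lo, hi = min(expected), max(expected)
    # Equal-width bins over the baseline range; guard against zero width.
    width = (hi - lo) / bins or 1.0

    def fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]       # training-time feature values
shifted = [0.5 + i / 200 for i in range(100)]  # production values, shifted up

no_drift = psi(baseline, baseline)
drift = psi(baseline, shifted)
```

Identical samples score zero, while the shifted sample scores far higher, which is the kind of signal an observability layer would turn into an alert.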

Q5: When should I consider re-architecting my AI app?

If you’re struggling with latency, versioning, model failures, or deployment bottlenecks, it’s time to modernize your stack.